Nagoya Marimbas

A Whitney-inspired visualisation of Steve Reich’s Nagoya Marimbas as arranged by Gravity Percussion

8th February 2015 – premiered at the Royal Northern College of Music’s Day of Percussion

RNCM, 124 Oxford Road, Manchester, M13 9RD

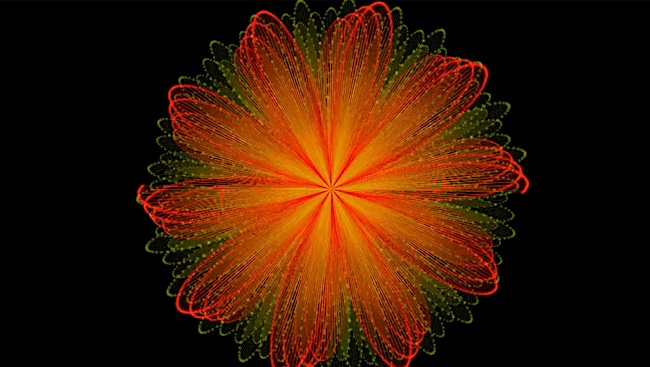

This new work, made in collaboration with Gravity Percussion, explores the counterpoint in Steve Reich’s Nagoya Marimbas by visualising each of the two interlocking marimba parts as a dynamic “Whitney Rose”. The two roses are occasionally connected together to display the harmonic relationship between them.

Premiered at the Royal Northern College of Music’s Day of Percussion on 8th February 2015, it was subsequently performed at the RNCM Percussion Ensemble Concert on 23rd April 2015. It was also screened as part of a live AV performance by Lewis Sykes of Monomatic and Mike & Kayo Blow of Blows in the Immersive Vision Theatre (IVT), Plymouth University, on 27th November 2015.

The work is an audiovisual study of counterpoint: the relationship between parts in a piece of music that are harmonically interdependent yet independent in rhythm and contour. Counterpoint is characteristic of the Baroque period, and Reich explores it in a contemporary idiom through “repeating patterns played on both marimbas, one or more beats out of phase, creating a series of two part unison canons”. The red dynamic rose pattern follows the first marimba part (panned left) while the yellow pattern follows the second marimba part (panned right). In visualising the harmonic structure within the music, the rose variants display a wide range of forms, from complex ‘spirograph’ or ‘string art’-like 2D patterns to curvaceous, 3D vessel-like shapes and exploded helices of sinusoidal lines.

John Whitney Sr. is considered by many to be the godfather of modern motion graphics. “Beginning in the 1960s, he created a series of remarkable films of abstract animation that used computers to create a harmony – not of colour, space, or musical intervals, but of motion” (Alves, 2005:46). He championed an approach in which animation wasn’t a direct representation of music, but instead expressed a “complementarity”: a visual equivalence to the attractive and repulsive forces of consonant/dissonant patterns found within music. Whitney generated motion graphic patterns through programs of simple algorithms run on early computers, output step-by-step to a cathode ray tube (CRT) monitor and then captured frame-by-frame with a film camera. These figures, often reminiscent of natural forms such as flowers and of traditional geometric Islamic art, would resolve periodically from apparent disorder (points of dissonance) into distinct alignments (points of consonance). He argued that these points of resolution could be seen as a visual equivalent to the harmonic structure within the music.
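The periodic resolution described above can be illustrated with a minimal sketch. It assumes one common reading of Whitney's approach, in which each point in a figure rotates at an integer multiple of a base speed, so the points fall into alignment at simple fractions of the cycle. This is not the code used in Nagoya Marimbas; the names `Point2D` and `whitneyPoints` are hypothetical and chosen only for illustration.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical helper type for this sketch.
struct Point2D {
    double x;
    double y;
};

// Positions of n points on a unit circle at normalised time t in [0, 1].
// Point k (counting from 1) completes k revolutions as t runs from 0 to 1,
// which is the assumed "integer multiple of a base speed" rule.
std::vector<Point2D> whitneyPoints(int n, double t) {
    assert(n >= 0);
    const double twoPi = 2.0 * std::acos(-1.0);
    std::vector<Point2D> pts;
    pts.reserve(static_cast<std::size_t>(n));
    for (int k = 1; k <= n; ++k) {
        const double angle = twoPi * k * t;
        pts.push_back({std::cos(angle), std::sin(angle)});
    }
    return pts;
}
```

Under this assumption, every point sits at the same position at t = 0 and t = 1, and at t = 0.5 the points collapse onto just two opposite positions (odd-numbered points on one side, even-numbered on the other). Intermediate times give the more scattered arrangements that read as apparent disorder.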

Reich’s original musical score was transcribed into and arranged within Sibelius by Peter Mitchell of Gravity Percussion, then exported as a MIDI file. The file was loaded into Ableton Live Suite 9.1 and routed to both the visualisation and two Kontakt 5 ‘SonoKinetic Mallets’ instruments via a custom-made Teensy 2.0 micro-tuner, which retuned the 12-ET (twelve-tone equal temperament) MIDI notes to a Thomas Young Temperament (circa 1799). Switching between the numerous visualisation presets and camera positions was automated via additional MIDI tracks sequenced against the marimba parts.
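The arithmetic behind retuning a 12-ET MIDI note can be sketched as follows. The text does not say how the Teensy micro-tuner applies its retuning, so the pitch-bend route shown here is an assumption, as are the ±200 cent bend range and the function names `retunedFrequency` and `pitchBendValue`. The per-note cent deviations of the Young temperament are not given in the text and are deliberately left out; the caller supplies them.

```cpp
#include <cassert>
#include <cmath>

// Frequency in Hz of a MIDI note under 12-ET (note 69 = A4 = 440 Hz),
// shifted by a deviation in cents (100 cents = one equal-tempered semitone).
double retunedFrequency(int midiNote, double centsOffset) {
    assert(midiNote >= 0 && midiNote <= 127);
    return 440.0 * std::pow(2.0, (midiNote - 69) / 12.0 + centsOffset / 1200.0);
}

// 14-bit MIDI pitch-bend value (0..16383, centre 8192) approximating a
// deviation in cents. Assumes the receiving instrument's bend range is
// +/- bendRangeCents; 200 cents is a common default, not a value from the text.
int pitchBendValue(double centsOffset, double bendRangeCents = 200.0) {
    assert(bendRangeCents > 0.0);
    const double raw = 8192.0 + (centsOffset / bendRangeCents) * 8192.0;
    long rounded = std::lround(raw);
    if (rounded < 0) rounded = 0;          // clamp to the valid 14-bit range
    if (rounded > 16383) rounded = 16383;
    return static_cast<int>(rounded);
}
```

With these assumptions, a note that the target temperament places a few cents below its equal-tempered pitch maps to a bend value slightly under the centre value of 8192, and a zero deviation leaves the note at its 12-ET frequency.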

Nagoya Marimbas is part of a series of real-time, code-based audiovisual works that are inspired by, interpret and extend Whitney’s animated films. Building on his aesthetic of simple dot and line and dynamic geometric form, it re-imagines his intricate two-dimensional patterns as complex three-dimensional shapes. More significantly, it also takes the next step of integrating music and its inherent harmony as the driving force for those visual forms, something that Whitney, despite his ideas on how to apply the Pythagorean musical laws of harmony to the visual art of motion, never fully realised.

A next-stage development of Whitney Triptych, a piece that first featured as part of a performance at Seeing Sound 3, Bath Spa University, UK, on 24th November 2013, it extends an existing set of short, abstract audiovisual works created as part of Lewis Sykes’ recently completed Practice as Research Ph.D. and ongoing collaborative practice with Ben Lycett. This growing body of work attempts to show a deeper connection between what is heard and what is seen by making the audible visible. Looking in the visual domain for qualities similar to the vibrations that generate sound, the works try to create an amalgam of the audio and visual in which there is a more literal ‘harmony’: a connection between sound and moving image that is more ‘harmonious’, elementary and direct, and that attempts to communicate in a way that is not just seen and heard but is instead ‘co-sensed’ or ‘seenheard’.

This work demonstrates Sykes & Lycett’s rolling development towards a custom-made, real-time music visualisation system. The system is built within openFrameworks, the open-source C++ toolkit for creative coding, and also uses custom-made micro-tuning hardware based on the Arduino micro-controller electronics prototyping platform alongside commercial audio production tools.

References:

Alves, B. (2005) ‘Digital Harmony of Sound and Light.’ Computer Music Journal, 29(4) pp. 45-54.